Extract structured data

Ideally, we would see ratings for each topic, such as quality, shipping, and price. This has two benefits. Without per-topic ratings, a review that covers several topics under one overall rating can mislead us: we may falsely assign a low rating to quality, or a high rating to shipping.

However, to keep our example simple, we ignore those types of reviews for now. You may wonder, why not let the customer provide feedback on each of these topics directly?

Well, in that case, the review process will become more complex. This may cause the customer not to review at all.

So, how do we do this? We need a scalable way to extract structured information, i.e. the topic, from the unstructured text, i.e. the review. In this example, we use an LLM because of its flexibility and ease of use.

It allows us to complete the task without training a model. Note, however, that for very structured outputs, a simple classification model could also be trained once enough samples are collected. We try to make the LLM output valid JSON, because we can easily load raw JSON as an object in Python.
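As a minimal sketch of why JSON is convenient here: the standard library turns a JSON string into a Python object in one call, and rejects anything that is not valid JSON. The `raw_output` string and its field names are hypothetical stand-ins for an LLM response.

```python
import json

# Hypothetical raw LLM response; the field names are illustrative only.
raw_output = '{"topic": "shipping", "rating": 2}'

parsed = json.loads(raw_output)  # JSON string -> Python dict
print(parsed["topic"], parsed["rating"])

# Output that is not valid JSON raises json.JSONDecodeError, which we can catch.
try:
    json.loads("Sure! Here is the JSON: {topic: shipping}")
    decode_failed = False
except json.JSONDecodeError as err:
    decode_failed = True
    print(f"invalid JSON: {err.msg}")
```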

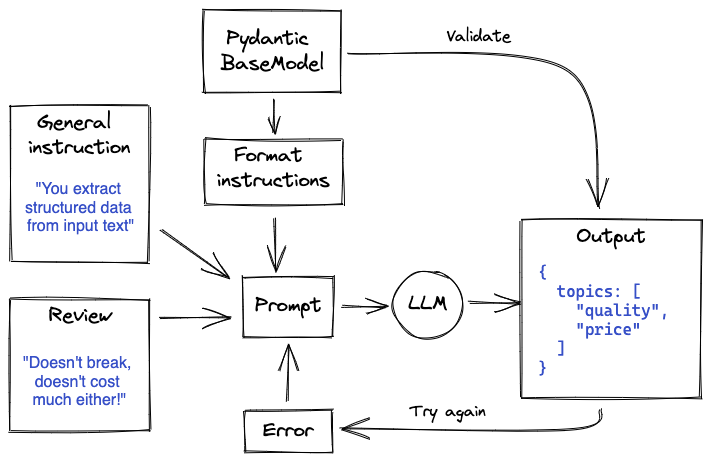

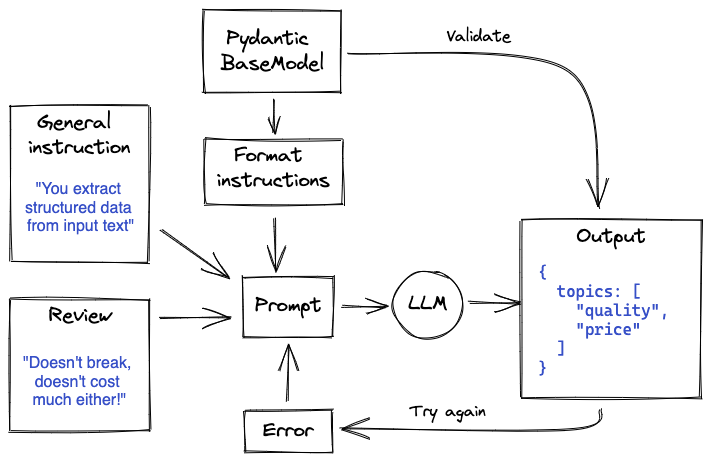

For example, we can define a Pydantic BaseModel, and use it to validate the model outputs. In addition, we can use its definition to immediately give the model the right formatting instructions.
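A minimal sketch of that idea, assuming the `pydantic` package is installed. The `ReviewTopic` model, its fields, and the helper names are hypothetical and not taken from the source repo; the schema lookup falls back to the older `schema()` method so the sketch works on both Pydantic v1 and v2.

```python
import json
from typing import Literal

from pydantic import BaseModel, ValidationError


class ReviewTopic(BaseModel):
    """Hypothetical schema: one topic and a rating extracted from a review."""
    topic: Literal["quality", "shipping", "price"]
    rating: int


def format_instructions(model) -> str:
    # Pydantic v2 exposes model_json_schema(); v1 uses schema().
    get_schema = getattr(model, "model_json_schema", None) or model.schema
    return "Reply with JSON only, matching this JSON schema:\n" + json.dumps(get_schema())


def parse_output(raw: str) -> ReviewTopic:
    # json.loads raises on malformed JSON; the constructor raises
    # ValidationError when a field is missing or has a disallowed value.
    return ReviewTopic(**json.loads(raw))


good = parse_output('{"topic": "shipping", "rating": 4}')
print(good.topic, good.rating)

try:
    parse_output('{"topic": "weather", "rating": 4}')
    rejected = False
except ValidationError as err:
    rejected = True
    print("rejected:", len(err.errors()), "error(s)")
```

The string returned by `format_instructions(ReviewTopic)` can be appended to the prompt, so the schema is defined in one place and reused for both instructing and validating.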

To read more about getting an LLM to produce structured outputs, check out our previous blog post. The model might not get it right the first time, however. We can give the model a few tries by feeding validation errors back into the prompt. Such an approach can be considered a Las Vegas-type algorithm.
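The retry idea can be sketched as follows. To keep the example free of dependencies, a plain-Python `validate` function stands in for the Pydantic model, and a fake `llm` callable stands in for the real model call; all names and the prompt wording are assumptions.

```python
import json
from typing import Callable

ALLOWED_TOPICS = {"quality", "shipping", "price"}  # hypothetical topic list


def validate(raw: str) -> dict:
    """Stand-in for the Pydantic model: raise ValueError on any problem."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or data.get("topic") not in ALLOWED_TOPICS:
        raise ValueError(f"'topic' must be one of {sorted(ALLOWED_TOPICS)}")
    return data


def extract_with_retries(llm: Callable[[str], str], prompt: str, max_tries: int = 3) -> dict:
    current_prompt = prompt
    for _ in range(max_tries):
        raw = llm(current_prompt)
        try:
            return validate(raw)
        except ValueError as err:
            # Feed the failed output and the error back so the model can correct itself.
            current_prompt = (
                f"{prompt}\n\nYour previous answer was:\n{raw}\n"
                f"It failed validation with: {err}\nPlease answer again with valid JSON."
            )
    raise RuntimeError(f"no valid output after {max_tries} tries")


# Fake LLM for demonstration: wrong on the first call, right on the second.
replies = iter(['{"topic": "weather"}', '{"topic": "shipping"}'])
result = extract_with_retries(lambda p: next(replies), "Extract the topic of: 'Arrived late.'")
print(result)
```

The `max_tries` cap bounds cost: each retry is another model call, so the loop reports failure rather than running indefinitely.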

That is the flow, at least for the basics. We can extend this, for example by allowing for a general user-defined schema, which can then be parsed into a Pydantic BaseModel. As a last step, we separate our logic from our inputs and outputs, so we can easily run these operations on new batches of data.

We can do so by parametrizing the location to the input reviews, and the output location for the JSON files. We use these to load reviews and store output files as we run our batch.
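A minimal sketch of such a parametrized batch, assuming one review per `.txt` file and one JSON output file per review. The file layout, the `run_batch` name, and the dummy extractor are assumptions; in practice `extract` would wrap the LLM call.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_batch(input_dir: Path, output_dir: Path, extract: Callable[[str], dict]) -> int:
    """Read every .txt review in input_dir and write one JSON file per review."""
    output_dir.mkdir(parents=True, exist_ok=True)
    count = 0
    for review_path in sorted(input_dir.glob("*.txt")):
        result = extract(review_path.read_text(encoding="utf-8"))
        out_path = output_dir / f"{review_path.stem}.json"
        out_path.write_text(json.dumps(result), encoding="utf-8")
        count += 1
    return count


# Demo with a temporary directory and a dummy extractor in place of the LLM call.
with tempfile.TemporaryDirectory() as tmp:
    reviews, outputs = Path(tmp) / "reviews", Path(tmp) / "outputs"
    reviews.mkdir()
    (reviews / "r1.txt").write_text("Arrived two weeks late.", encoding="utf-8")
    n_written = run_batch(reviews, outputs, lambda text: {"topic": "shipping", "chars": len(text)})
    first_output = json.loads((outputs / "r1.json").read_text(encoding="utf-8"))
    print(n_written, first_output)
```

Because the locations are plain arguments, the same function can be pointed at a new batch without touching the extraction logic.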

When we run our batch job, we prompt the LLM once per review, using the logic described in the previous section. The review itself can be dynamically inserted into our prompt using a prompt template, which was implemented in the source repo.
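The source repo's template is not reproduced here; as a rough illustration of the idea, the standard library's `string.Template` is enough. The template wording and the `$review` placeholder are assumptions.

```python
from string import Template

# Hypothetical template; the wording and the $review placeholder are assumptions.
PROMPT_TEMPLATE = Template(
    "You are given a customer review.\n"
    'Return JSON with the keys "topic" and "rating".\n\n'
    "Review:\n$review\n"
)


def build_prompt(review: str) -> str:
    # substitute() raises KeyError if a placeholder is left unfilled.
    return PROMPT_TEMPLATE.substitute(review=review)


prompt = build_prompt("Great quality, but delivery took a month.")
print(prompt)
```

One reason to prefer `$`-style placeholders here is that a prompt containing JSON examples is full of curly braces, which would need escaping with `str.format`.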

Subsequently, we can use the output JSONs however we want. They should contain all the information we specified in our BaseModel. In the example of customer reviews, we can now easily group the reviews together by topic with the available structured information.

But we leave that out of scope for this post. The above solution is already applicable to many use cases where we want to extract structured information from unstructured input data.

However, to generalize even further, we can add the functionality to process PDF documents as well, as these are often the starting point of text-related use cases. In the source repo, we have assumed the simple case where the documents are small enough to be fed through an LLM all at once.

In some cases, however, PDFs span dozens of pages.

We'd throw a rickety folding cage with bait into the water, and we'd wait an indeterminate amount of time for the inevitably irate crustaceans to make their way in.

What does this have to do with data extraction? Well, getting useful morsels of data from a vast ocean of information isn't unlike crabbing, minus usually the maimed cuticles. You need a tool—albeit a much more sophisticated one than a rickety old cage—that can sift through the depths for you, so you can pluck out what you need when you're ready.

Effective, automated data extraction allows you to do just that.

Data extraction is the pulling of usable, targeted information from larger, unrefined sources. You start with massive, unstructured logs of data like emails, social media posts, and audio recordings.

Then a data extraction tool identifies and pulls out specific information you want, like usage habits, user demographics, financial numbers, and contact information. After separating that data like pulling crabs from the bay, you can cook it into actionable resources like targeted leads, ROIs, margin calculations, operating costs, and much more.

For example, a mortgage company might use data extraction to gather contact information from a repository of pre-approval applications. This would allow them to create a running database of qualified leads they can follow up with to offer their services in the future.

The purpose of extracting data is to distill big, unwieldy datasets into usable data. This usually involves batches of files, sprawling tables that are too large to be readily used, or files formatted in such a way that they're difficult to parse for actionable data.

Data extraction gives businesses a way to use all these otherwise unusable files and datasets, often in ways beyond the intended purpose of the data. In the mortgage example above, the primary purpose of the pre-approval applications wasn't to create a lead list—it was to pre-approve applicants for mortgages and hopefully convert them into clients.

Data extraction allows this hypothetical mortgage company to get even more value out of a business process they already have to use, converting more leads into clients in the process.

Both data extraction and data mining turn sprawling datasets into information you can use.

But while mining simply organizes the chaos into a clearer picture, extraction provides blocks you can build into various analytical structures. Data extraction draws specific information from broad databases to be stored and refined.

Let's say you've got several hundred user-submitted PDFs. You'd like to start logging user data from those PDFs in Excel. You could manually open each one and update the spreadsheet yourself, but you'd rather pour Old Bay on an open wound.

So, you use a data extraction tool to automatically crawl through those files instead and log specified keyword values. The tool then updates your spreadsheet while you go on doing literally anything else.
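A toy version of that workflow might look like the sketch below. It assumes the text has already been pulled out of each PDF (which would need a PDF library) and that the values follow `Label: value` lines; the file names, field names, and sample values are made up. It writes CSV, which Excel can open.

```python
import csv
import io
import re

# Stand-ins for text already pulled out of the PDFs; the field names are made up.
documents = {
    "form_001.pdf": "Name: Ada Lopez\nEmail: ada@example.com\nPlan: Pro",
    "form_002.pdf": "Name: Sam Osei\nPlan: Basic",
}
FIELDS = ["Name", "Email", "Plan"]


def extract_fields(text: str) -> dict:
    """Return the value after each 'Field:' label, or '' when it is absent."""
    row = {}
    for field in FIELDS:
        match = re.search(rf"^{field}:\s*(.+)$", text, flags=re.MULTILINE)
        row[field] = match.group(1).strip() if match else ""
    return row


rows = [{"file": name, **extract_fields(text)} for name, text in documents.items()]

buffer = io.StringIO()  # a real job would open a .csv file instead
writer = csv.DictWriter(buffer, fieldnames=["file", *FIELDS])
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```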

Data mining, on the other hand, identifies patterns within existing data. Let's say your eCommerce shop processes thousands of sales across hundreds of items every month. Using data mining software to assess your month-over-month sales reports, you can see that sales of certain products peak around Valentine's Day and Christmas.

You ramp up timely marketing efforts and make plans to run holiday sales a month in advance.

The data you can pull using data extraction tools can be categorized as either structured or unstructured. Structured data has consistent formatting parameters that make it easily searchable and crawlable, while unstructured data is less defined and harder to search or crawl.

This binary might trigger Type-A judgment that structured is always preferable to unstructured, but each has a role to play in business intelligence.

Think of structured data like a collection of figures that abide by the same value guidelines. This consistency makes them simple to categorize, search, reorder, or apply a hierarchy to.

Structured datasets can also be easy to automate for logging or reporting since they're in the same format.

Unstructured data is less definite than structured data, making it tougher to crawl, search, or apply values and hierarchies to.

The term "unstructured" is a little misleading in that this data does have its own structure—it's just amorphous. Using unstructured data often requires additional categorization like keyword tagging and metadata, which can be assisted by machine learning.

There are two data extraction methods: incremental and full. Like structured and unstructured data, one isn't universally superior to the other, and both can be vital parts of your quest for business intelligence.

Incremental extraction is the process of pulling only the data that has been altered in an existing dataset. You could use incremental extraction to monitor shifting data, like changes to inventory since the last extraction.

Identifying these changes requires the dataset to have timestamps or a change data capture (CDC) mechanism. To continue the crabbing metaphor, incremental extraction is like using a baited line that goes taut whenever there's a crab on the end: you only pull it when there's a signaled change to the apparatus.
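The timestamp variant can be sketched in a few lines. The inventory rows, the `updated_at` field, and the watermark handling are assumptions for illustration; a CDC mechanism would replace the timestamp comparison with a change log supplied by the source system.

```python
from datetime import datetime
from typing import Optional

# Hypothetical inventory rows, each carrying a last-modified timestamp.
inventory = [
    {"sku": "A1", "stock": 40, "updated_at": datetime(2024, 3, 1, 9, 0)},
    {"sku": "B2", "stock": 0, "updated_at": datetime(2024, 3, 5, 14, 30)},
    {"sku": "C3", "stock": 12, "updated_at": datetime(2024, 3, 7, 8, 15)},
]


def extract_incremental(rows: list, last_run: Optional[datetime]) -> list:
    """Return only rows modified after last_run (all rows when last_run is None)."""
    if last_run is None:
        return list(rows)
    return [row for row in rows if row["updated_at"] > last_run]


last_run = datetime(2024, 3, 4)
changed = extract_incremental(inventory, last_run)
print([row["sku"] for row in changed])

# Advance the watermark to the newest timestamp seen, ready for the next run.
if changed:
    last_run = max(row["updated_at"] for row in changed)
```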

Full extraction pulls all the data from a source at once, without filtering. This is useful if you want to create a baseline of information or an initial dataset to further refine later. If the data source has a mechanism for automatically notifying or updating changes after extraction, you may not need incremental extraction.

Full extraction is like tossing a huge net into the water and then yanking it up. Sure, you might get a crab or two, but you'll also get a bunch of other stuff to sift through.

ETL stands for extract, transform, load. You may also have heard of ELT (extract, load, transform), which swaps the order of the last two steps, but the basic functions are the same in either case. When it comes to business intelligence, the ETL process gives businesses a defined, iterative roadmap for harvesting actionable data for later use.

1. Extract: Data is pulled from a broad source (or from multiple sources), allowing it to be processed or combined with other data.
2. Transform: The extracted raw data gets cleaned up to remove redundancies, fill gaps, and make formatting consistent.
3. Load: The neatly packaged data is transferred to a specified system for further analysis.

The ETL data extraction process begins with raw data from any number of specified repositories. While extraction and transformation can be done manually, the key to effective ETL is to use data extraction software that can automate the data pull, sort the results, and clean it for storage and later use.
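The three steps can be illustrated with a toy pipeline. The two in-memory sources, the field names, and the SQLite target are all assumptions chosen to keep the sketch self-contained; real pipelines would read from files, APIs, or databases.

```python
import sqlite3

# Extract: raw records from two hypothetical sources with inconsistent formatting.
source_a = [{"email": "Ada@Example.com ", "spend": "120.50"}]
source_b = [
    {"email": "ada@example.com", "spend": "120.50"},
    {"email": "sam@example.com", "spend": ""},
]


def transform(records: list) -> list:
    """Normalise emails, fill missing spend with 0.0, and drop duplicates."""
    seen, clean = set(), []
    for record in records:
        email = record["email"].strip().lower()
        if email in seen:
            continue
        seen.add(email)
        clean.append((email, float(record["spend"] or 0.0)))
    return clean


def load(rows: list, conn: sqlite3.Connection) -> None:
    """Write the cleaned rows to the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, spend REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(source_a + source_b), conn)
loaded = conn.execute("SELECT email, spend FROM customers ORDER BY email").fetchall()
print(loaded)
```

Here the transform step covers all three clean-up tasks named above: the duplicate record is removed, the empty spend value is filled, and the email formatting is made consistent.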

Data extraction tools fall into four categories: cloud-based, batch processing, on-premise, and open-source. These types aren't all mutually exclusive, so some tools may tick a few or even all of these boxes.

- Cloud-based tools: These scalable web-based solutions allow you to crawl websites, pull online data, and then access it through a platform, download it in your preferred file type, or transfer it to your own database.
- Batch processing tools: If you're looking to move massive amounts of data at once, especially if not all of that data is in consistent or current formats, batch processing tools can help by conveniently extracting in (you guessed it) batches.
- On-premise tools: Data can be harvested as it arrives and then automatically validated, formatted, and transferred to your preferred location.
- Open-source tools: Need to extract data on a budget? Look for open-source options, which can be more affordable and accessible for smaller operations.

When making decisions, devising campaigns, or scaling, you can never have too much information. But you do need to whittle down that information into digestible bits. And like all software, data extraction software is better when it incorporates automation, and not just because it saves you or an intern the effort of combing through massive amounts of files manually.

Automating data extraction:

- Improves decision-making: With a mainline of targeted data, you and your team can make decisions based on facts, not assumptions.
- Enhances visibility: By identifying and extracting the data you need when you need it, these tools show you exactly where your business stands at any given time.
- Increases accuracy: Automation reduces human error that can come from manually and repeatedly moving and formatting data.
- Saves time: Automated extraction tools free up employees to focus on high-value tasks, like applying that data.

Making the most of your data means extracting more actionable information automatically—and then putting it to use. Here are a few examples of how Zapier can help your business do both by connecting and automating the software and processes you depend on. You can use the Formatter by Zapier to pull contact information and URLs, change the format, and then transfer the data.
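To make the idea of pulling contact information and URLs out of free text concrete, here is a rough Python sketch using regular expressions. It is not how Formatter by Zapier is implemented; the patterns and the sample text are assumptions, and the patterns are deliberately simple.

```python
import re

# Deliberately simple patterns; real-world addresses and URLs have more edge cases.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_RE = re.compile(r"https?://[^\s<>]+")

text = (
    "Contact Ada at ada.lopez@example.com or visit https://example.com/pricing. "
    "Billing questions go to billing@example.org."
)

emails = EMAIL_RE.findall(text)
urls = [u.rstrip(".,;") for u in URL_RE.findall(text)]  # trim trailing punctuation
print(emails, urls)
```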

Email parsing is the process of extracting specific data from emails automatically. It typically involves analyzing the content of an email message to identify and extract elements like the sender's name, email address, subject, date, and the actual message text. Email parsing is commonly used in various applications, such as email management systems, customer support tools, and data extraction tools, to help organize, categorize, and make use of the information contained within emails more efficiently.
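For a plain-text message, the elements listed above can be pulled out with Python's standard `email` package, as in this minimal sketch. The sample message is made up, and multipart or HTML emails would need extra handling.

```python
from email import message_from_string
from email.utils import parseaddr, parsedate_to_datetime

# Made-up plain-text message: headers, a blank line, then the body.
raw_email = (
    "From: Ada Lopez <ada@example.com>\n"
    "Subject: Order 1042 arrived damaged\n"
    "Date: Tue, 05 Mar 2024 14:30:00 +0000\n"
    "\n"
    "Hi, the box was crushed and one item is broken.\n"
)

message = message_from_string(raw_email)
sender_name, sender_address = parseaddr(message["From"])

parsed = {
    "sender_name": sender_name,
    "sender_address": sender_address,
    "subject": message["Subject"],
    "date": parsedate_to_datetime(message["Date"]).isoformat(),
    "body": message.get_payload().strip(),
}
print(parsed)
```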

LLMs are capable of ingesting large amounts of unstructured data and returning it in structured formats, and LlamaIndex is set up to make this easy. Using LlamaIndex, you can get an LLM to read natural language and identify semantically important details such as names, dates, addresses, and figures, and return them in a consistent structured format regardless of the source format. This can be especially useful when you have unstructured source material like chat logs and conversation transcripts. Once you have structured data you can send it to a database, or you can parse structured outputs in code to automate workflows. Check out our Structured Output guide for a comprehensive overview of structured data extraction with LlamaIndex. You can do it in a standalone fashion (Pydantic program) or as part of a RAG pipeline. We also have multi-modal structured data extraction.
