Performance testing for continuous integration


Continuous Performance Testing: A Comprehensive Guide for Developers

Turbo Li

Introduction

Amid the dynamic landscape of software and technology, it is imperative that software applications meet user expectations and maintain optimal performance.

Defining Continuous Performance Testing

Continuous performance testing involves assessing an application's performance as it faces increased load.

Exploring Various Performance Testing Types

One prominent type of performance testing is load testing, which is widely employed in the field.

Ongoing Evaluation

A fundamental distinction between performance testing and continuous performance testing lies in their timing and nature.

Continuous Integration (CI) Approach

Traditional performance tests are commonly executed after release cycles or at specific milestones rather than being an intrinsic part of the Continuous Integration (CI) process.

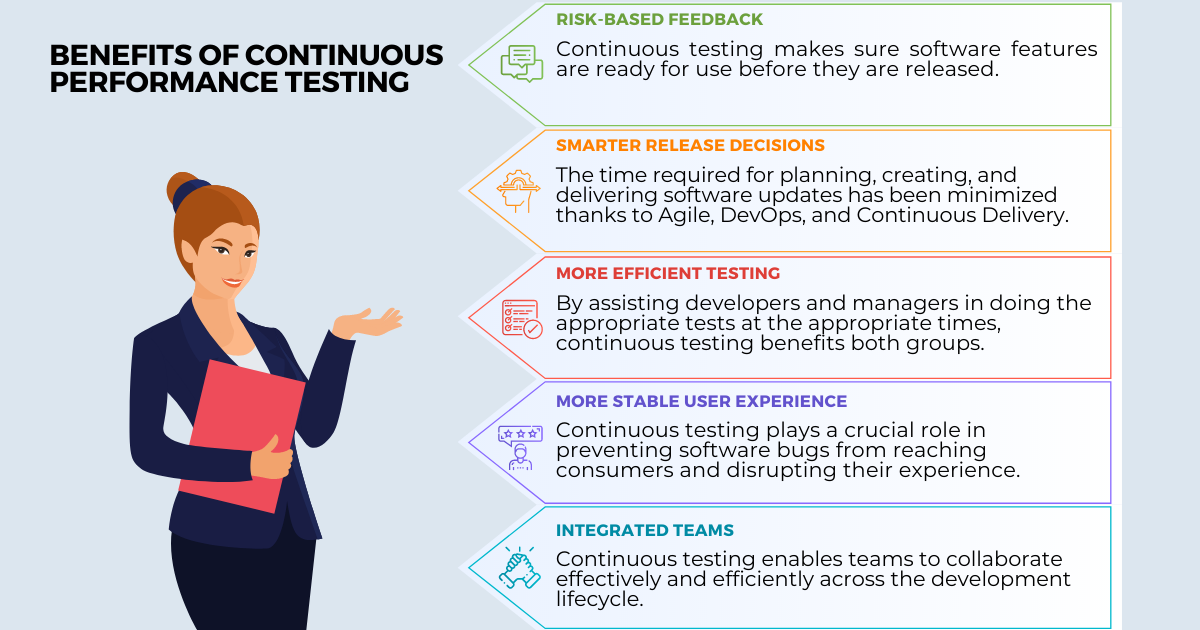

The Advantages of Continuous Testing

Timely Identification of Performance Issues: Consistent performance testing in the development cycle allows for early recognition of potential performance issues, effectively mitigating the risk of encountering more severe problems later.

Swift Feedback Loop: Embedding continuous performance testing into the development process offers immediate insights into the application's performance, enabling developers to detect and address any emerging concerns promptly.

Enhanced User Satisfaction: Ensuring optimal application performance, especially during high usage, significantly enhances user experience. This, in turn, leads to higher levels of user satisfaction and increased engagement.

Cost-Efficient Development: Identifying and rectifying performance issues in the early stages of development helps curb overall costs. It averts the need for costly rework or extensive infrastructure modifications that might otherwise be necessary later in the development life cycle.

Optimized Resource Utilization: Automating performance testing optimizes the allocation of time and resources within development teams. This efficiency allows teams to concentrate on aspects of development beyond performance, boosting overall productivity.

Elevated Software Quality: Continuous performance testing thoroughly evaluates an application's performance aspects. This, in turn, contributes to an overall improvement in the quality and reliability of the application.

Understanding the Beneficiaries of Continuous Performance Testing

Determining the optimal fit for continuous performance testing largely depends on a company's unique requirements. Different organizations find varying degrees of value in incorporating continuous performance testing, especially those with specific characteristics:

Companies with a Large User Base: Organizations serving a substantial user base often find continuous performance testing particularly beneficial. The larger the user base, the more critical it becomes to ensure seamless application performance under various conditions.

High Interaction Volumes or Seasonal Traffic Spikes: Businesses experiencing high and fluctuating interaction levels or seasonal spikes in traffic can significantly benefit from continuous performance testing. It provides insights into how the application handles varying loads, aiding optimization strategies.

Projects with Significant Time or Financial Investment: Projects with substantial time or financial investments and a long projected lifespan stand to gain significantly from continuous performance testing. It helps protect the investment by proactively identifying and addressing performance issues.

Companies with Abundant Staff Resources: Organizations with a large staff pool can effectively implement continuous performance testing due to the available resources and expertise.

Critical Challenges in Continuous Performance Testing

Test Environment Complexity: Establishing a realistic test environment proves complex, particularly for applications relying on intricate infrastructures like microservices or cloud-based services. The absence of precise performance-related requirements in user stories also exacerbates this challenge.

Effective Test Data Management: Ensuring test data accurately mirrors real-world scenarios poses a challenge, especially for large data processing applications. This becomes more pronounced when DevOps teams lack sufficient expertise in performance engineering, particularly in organizations where external teams handle test data.

Test Script Maintenance: Regular maintenance of test scripts is vital to simulate accurate user behavior and generate realistic loads. This involves setting up automated tests, executing them regularly, and developing comprehensive performance reports using cloud-based performance testing tools with built-in management and reporting capabilities.

Seamless Tool Integration: Integrating performance testing tools into the development process can prove challenging, particularly for organizations with intricate workflows or legacy systems. Notable obstacles are insufficient accountability within development teams regarding performance testing and the absence of Application Performance Monitoring (APM) tools in the development pipeline.

Initiating Continuous Performance Testing: A Starting Guide

Embarking on continuous performance testing requires a solid foundation with a functional Continuous Integration (CI) pipeline in place.

If you want to stay ahead of performance issues, provide an optimal user experience, and outsmart your competition, continuous performance testing is the way forward. Early application performance monitoring before new features and products go live saves time during the development lifecycle.

With continuous performance testing, you prevent poor customer experiences with future releases. When you continuously test your infrastructure, you ensure that its performance does not degrade over time. Your team should have a goal and track results with metrics to ensure that you are making progress.

By Nate Lee, September 5


When you mention performance testing, most developers think of the following steps:

- Identify all the important features that you want to test
- Spend weeks working on performance test scripts
- Perform the tests and analyze pages of performance test results

This approach may have worked well in the past, when most applications were developed using the waterfall approach.

To summarize the need for continuous performance testing:

- It ensures that your application is ready for production
- It allows you to identify performance bottlenecks
- It helps to detect bugs
- It helps to detect performance regressions
- It allows you to compare the performance of different releases

Performance testing should be continuous so that an issue does not go unnoticed for too long and hurt the user experience.

How to start continuous performance testing

To begin continuous performance testing, first make sure that you have a continuous integration (CI) pipeline in place.

Step 1: Collect information from the business side
You need to consider how many requests you must be able to handle in order to maintain the current business SLAs.

Step 2: Start writing performance tests
Usually, the most straightforward approach is to start with testing the API layer.

Step 3: Select your use cases
The next step is to identify the scenarios you want to test.
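For Step 1, one way to turn business-side numbers into load-test targets is sketched below. It is only an illustration: the function name `target_load`, the daily volume, the peak factor, and the mean response time are all hypothetical inputs that would have to be agreed with the business side. The concurrency estimate uses Little's Law (requests in flight = arrival rate x time in system).

```python
def target_load(daily_requests, peak_factor, mean_response_s):
    """Translate business volumes into load-test targets.

    All three inputs are assumptions for illustration, not values from the article.
    """
    seconds_per_day = 24 * 60 * 60
    average_rps = daily_requests / seconds_per_day
    # Peak traffic is modeled as a simple multiple of the daily average.
    peak_rps = average_rps * peak_factor
    # Little's Law: requests in flight = arrival rate x time spent in the system.
    concurrent_requests = peak_rps * mean_response_s
    return {
        "average_rps": average_rps,
        "peak_rps": peak_rps,
        "concurrent_requests": concurrent_requests,
    }


# Hypothetical figures: 8.64 million requests per day, peaks at 3x average,
# and a mean response time of 200 ms.
targets = target_load(daily_requests=8_640_000, peak_factor=3, mean_response_s=0.2)
print(targets)
```

The peak request rate is what the test scenarios would aim to sustain, and the concurrency figure gives a rough starting point for how many simulated users or connections the load generator needs.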

Get ahead with continuous performance testing

Development teams should always be looking for ways to improve their processes.

Get started with Speedscale

A performance testing tool like Speedscale allows teams to test continuously, with the help of real-life traffic that's been recorded, sanitized, and replayed.


Or at least that it has not degraded with respect to the previous version. This is the best way to do performance testing when working in continuous integration.

This allows testers to focus on more challenging or critical tasks that draw on their full human potential. However, this does not mean that only continuous performance testing has to be performed. It is also necessary to perform traditional software testing for major releases that involve a significant change or are expected to introduce a performance problem, taking into account the particular requirements of each release and the particular events expected.

First of all, it is necessary to define the testing strategy to be implemented. Among other things, it is necessary to focus on what functionalities are going to be tested, what data is needed, what load or what scenarios are going to be executed for each test, how and when they are going to be executed, what metrics are going to be obtained from the results, and how the results are going to be displayed.

Then, we start with the automation of the tests and, as they are ready, they will be integrated into the execution pipeline.

It is important to point out that this whole process, which I summarize here, takes time for analysis, implementation, and iteration, like any other project. Sometimes it is not as straightforward as I describe, and it is necessary to revisit some decisions, try other options, or remove previously defined items from the scope.

On one hand, there are the general benefits of performance testing, such as mitigating the risk of observing problems associated with the performance of the system once it is put into production.

This reduces the likelihood that users are affected by these problems and that the image of the product and the business suffers.

This reduces context-switching for developers. When working in sprints, the context often changes from one sprint to another. If bugs are not detected in time, the developer who has to return to the task to fix them may already be working on something else, and it may be harder to focus on the problem again.

The information provided by the two approaches is complementary to each other. It depends on the project, the company, the quality and stability demands of the application, and the established image of the company. It depends on the maturity of the team, how critical the quality performance level is for the system, and on the company.

But above all, it depends on what is considered expensive and what is considered cheap. To give an example, someone may consider it expensive in terms of time and money to go to the doctor periodically for check-ups, but if we skip them and eventually develop a serious illness that those check-ups could have prevented, it ends up being far more expensive.

CTO.ai insights aims to improve software delivery performance in an organization. When your application is deployed into CTO.ai, you can measure performance and enable your team to measure the effectiveness of their DevOps practices and identify areas for improvement.

As more alterations are made, isolating the primary cause becomes more difficult, and every fix may result in further test cycles. If performance is poor at that moment, it will probably change the entire release schedule. To overcome these challenges, teams must learn how to speed up software releases while continually ensuring they don't introduce performance concerns into the production cycle.

Functional testing should start with unit and integration tests as soon as possible. But performing non-functional testing is also crucial. Therefore, you need to conduct performance tests.


Deployments can be customized using technologies like Terraform, Puppet, Docker, and Kubernetes.

The planning stage is where you define the performance testing objectives, identify the key performance metrics to measure, and determine the workload scenarios to simulate during testing. This stage sets the foundation for the entire performance testing process.

Define Performance Goals: Clearly define the performance goals and expectations for your application. For example, determine the acceptable response time, throughput, or server resource utilization.

Identify Key Performance Metrics: Identify the key performance metrics that align with your performance goals. Examples include response time, throughput, error rates, and server resource utilization.

Determine Workload Scenarios: Identify the workload scenarios that represent the expected usage patterns of your application. For example, simulate different user loads, concurrent users, or transaction volumes.
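As a rough illustration of the metrics named above, the following sketch reduces raw request samples to a 95th-percentile response time, a throughput figure, and an error rate. The `Sample` type, the nearest-rank percentile choice, and the sample data are assumptions made for this example; real load-testing tools usually report these values themselves.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One simulated request: how long it took and whether it succeeded."""
    latency_ms: float
    ok: bool


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples, duration_s):
    """Reduce raw samples to response time, throughput, and error rate."""
    latencies = [s.latency_ms for s in samples]
    failures = sum(1 for s in samples if not s.ok)
    return {
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": len(samples) / duration_s,
        "error_rate": failures / len(samples),
    }


# Hypothetical run: 20 requests observed over 4 seconds, one of them failing.
samples = [Sample(latency_ms=100 + 10 * i, ok=(i != 7)) for i in range(20)]
summary = summarize(samples, duration_s=4.0)
print(summary)
```

A percentile is used instead of the mean because a few slow requests can hide behind a healthy average; which percentile to track is a decision for the planning stage.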

The design stage involves creating performance test scripts and scenarios based on the identified workload scenarios and performance goals. This stage also includes setting up the necessary test environment and selecting appropriate performance testing tools.

Create Performance Test Scripts: Develop test scripts that simulate the workload scenarios identified in the planning stage. These scripts should include activities such as user actions, data input, and expected system responses.

Set Up Test Environment: Set up a dedicated test environment that closely resembles your production environment. This includes configuring servers, databases, network settings, and any other components required for testing.

Select Performance Testing Tools: Choose performance testing tools that align with your testing requirements and budget. Popular performance testing tools include Apache JMeter, Gatling, k6, and LoadRunner.

The execution stage aims to identify performance bottlenecks and validate whether the system meets the defined performance goals.

Execute Performance Tests: Run the performance test scripts developed in the design stage against the test environment.

Analyze Performance Metrics: Analyze the collected performance metrics to identify any performance bottlenecks or deviations from the defined performance goals. Use performance testing tools or custom scripts to generate performance reports and dashboards for better visibility.

Tune and Optimize: If performance bottlenecks are identified, work on tuning and optimizing the system to improve its performance. This may involve optimizing code, database queries, server configurations, or infrastructure scaling.

Integrating performance tests into the CI pipeline can be achieved by using plugins or custom scripts that trigger the performance tests after the completion of functional tests.

Configure Thresholds: Define performance thresholds for each performance metric to determine whether the build passes or fails. For example, if the response time exceeds a certain threshold, the build should fail.

Automated Reporting: Set up automated performance reporting to provide visibility into the performance test results. This can include generating performance dashboards, sending email notifications, or integrating with collaboration tools like Slack or Microsoft Teams.
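A threshold check of the kind described above could look roughly like the following. This is a minimal sketch, not the mechanism of any particular CI plugin: the metric names, the limits, and the helpers `check_thresholds` and `gate` are hypothetical, and every threshold is assumed to be an upper bound.

```python
def check_thresholds(metrics, thresholds):
    """Return a list of violations; an empty list means the build may pass.

    Assumes every threshold is an upper bound (lower values are better).
    """
    violations = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            # A missing metric is treated as a failure so gaps are not silently ignored.
            violations.append(f"{name}: metric missing from results")
        elif value > limit:
            violations.append(f"{name}: {value} exceeds limit {limit}")
    return violations


def gate(metrics, thresholds):
    """Map the check onto a process exit code: 0 passes the build, 1 fails it."""
    violations = check_thresholds(metrics, thresholds)
    for line in violations:
        print("FAIL", line)
    return 1 if violations else 0


# Hypothetical thresholds and results from one performance run.
thresholds = {"p95_ms": 300, "error_rate": 0.01}
good_run = {"p95_ms": 280, "error_rate": 0.0}
slow_run = {"p95_ms": 450, "error_rate": 0.0}

print(gate(good_run, thresholds))
print(gate(slow_run, thresholds))
```

In a pipeline script, the return value of `gate` would typically be passed to `sys.exit`, since most CI systems mark a step as failed when its process exits with a non-zero code.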

The maintenance stage involves regularly reviewing and updating your performance testing strategy to adapt to changes in your application and infrastructure. This ensures that performance testing remains effective and relevant over time.

By measuring and monitoring these DORA metrics, organizations can gain insights into their software delivery performance and identify areas for improvement. Continuous analysis and optimization of these metrics help organizations adopt DevOps best practices, enhance their development processes, and deliver high-quality software more efficiently.

Choosing performance testing tools: Selecting the right tools to simulate different user loads, monitor application performance, and analyze test results is essential for effective performance testing. Examples of popular performance testing tools include CTO.ai and LoadRunner.

Writing performance test scripts: Creating test scripts that simulate various user scenarios and workflows allows teams to evaluate the application's performance under different conditions.

Using a platform such as CTO.ai to automatically execute performance tests as part of the build process ensures that performance issues are identified and addressed early in the development lifecycle.

Analyzing results in CTO.ai and identifying performance bottlenecks helps teams to optimize the application's performance and maintain a high-quality user experience.

Continuously monitoring and optimizing performance: Regular performance testing and analysis during the development process help to identify and address performance issues, ensuring that the application remains stable and performs optimally as it evolves.

Here are some key factors to consider:

Lead Time for Changes: Lead time measures the time it takes for a code change to go from commit to deployment in production.
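Lead time for changes, as defined above, can be computed from pairs of commit and deployment timestamps. The sketch below assumes those timestamps are already available; the function name `lead_times_hours` and the data are hypothetical, and reporting the median is one common choice among several.

```python
from datetime import datetime
from statistics import median


def lead_times_hours(changes):
    """Hours from commit to production deployment for each change."""
    return [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]


# Hypothetical (commit time, production deployment time) pairs.
changes = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 15, 0)),
    (datetime(2024, 1, 2, 10, 0), datetime(2024, 1, 3, 10, 0)),
    (datetime(2024, 1, 4, 8, 0), datetime(2024, 1, 4, 10, 0)),
]

hours = lead_times_hours(changes)
print("lead times (h):", hours)
print("median lead time (h):", median(hours))
```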

Performance in Continuous Integration

Chapter: Performance Engineering

Types of Tests

Use test frameworks, such as JUnit, to develop tests that are easily integrated into existing continuous-integration environments.

… searchForProduct("DVD Player"); if (result. … addReview("Should be a good product"); productPage.addToCart(); driver.close(); } }

Listing 3.

Adding Performance Tests to the Build Process

Adding performance tests into your continuous-integration process is one important step toward continuous performance engineering.

Conducting Measurements

Just as the hardware environment affects application performance, it affects test measurements.

Analyzing Measurements

Some key metrics for analysis include CPU usage, memory allocation, network utilization, the number and frequency of database queries and remoting calls, and test execution time.

Regression Analysis

Every software change is a potential performance problem, which is exactly why you want to use regression analysis. When and how often should you do regression analysis? Here are three rules of thumb:

1. Perform regression analysis every time you are about to make substantial architectural changes to your application, such as the one in the example above. To identify a regression of substantial architectural changes, it is often necessary to have the application deployed in an environment where you can simulate larger load. This type of regression analysis cannot be done continuously, as it would be too much effort.

2. Automate regression analysis on your unit and functional tests as explained in the previous sections. This will give you great confidence about the quality of the small code changes your developers apply.

3. Perform regression analysis in the load- and performance-testing phases of your project, and analyze regressions of performance data you capture in your production environment. Here the best practice is to compare the performance metrics to a baseline result. The baseline is typically a result of a load test on your previously released software.
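Comparing a run against a baseline can be sketched as follows. This is an illustration under stated assumptions: the metric names, the 10% tolerance, and the function `find_regressions` are hypothetical, and every metric is assumed to be "lower is better" (a throughput metric would need the comparison inverted).

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics whose relative increase over the baseline exceeds the tolerance.

    Assumes lower values are better for every metric in the baseline.
    """
    regressions = {}
    for name, base in baseline.items():
        if name not in current or base <= 0:
            # Skip metrics that cannot be compared meaningfully.
            continue
        change = (current[name] - base) / base
        if change > tolerance:
            regressions[name] = change
    return regressions


# Hypothetical results: the baseline comes from the previous release's load test.
baseline = {"p95_ms": 200.0, "db_queries_per_request": 12.0, "cpu_percent": 50.0}
current = {"p95_ms": 230.0, "db_queries_per_request": 12.0, "cpu_percent": 52.0}

regressions = find_regressions(baseline, current)
for name, change in regressions.items():
    print(f"{name} regressed by {change:.0%}")
```

The tolerance absorbs ordinary run-to-run noise; how large it needs to be depends on how stable the test environment is.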

With agile development methods, we are able to test using stable builds either continuously or at regular intervals. This, in turn, has enabled a much larger degree of test automation and allows automating large parts of the test process, especially in the area of functional testing, where it has gained widespread adoption. We're now observing a similar trend in performance testing.

Automated performance testing is triggered from within the build process. To avoid interactions with other systems and ensure stable results, these tests should be executed on their own hardware. The results, as with functional tests, can be formatted as JUnit or HTML reports. Although test execution time is relevant, it is of secondary importance.
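To show what formatting performance results as a JUnit report might involve, the sketch below writes the `testsuite`/`testcase`/`failure` XML layout that many CI servers accept. The exact schema a given server expects is an assumption here, as are the function name `to_junit_xml`, the test names, and the time limits.

```python
import xml.etree.ElementTree as ET


def to_junit_xml(suite_name, results):
    """Build a JUnit-style XML report.

    results: list of (test_name, elapsed_s, limit_s) tuples; a test fails
    when its elapsed time exceeds its limit.
    """
    failures = sum(1 for _, elapsed, limit in results if elapsed > limit)
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(results)),
        failures=str(failures),
    )
    for test_name, elapsed, limit in results:
        case = ET.SubElement(suite, "testcase", name=test_name, time=f"{elapsed:.3f}")
        if elapsed > limit:
            # The failure element is what a CI server typically uses to mark the test red.
            ET.SubElement(
                case,
                "failure",
                message=f"took {elapsed:.3f}s, limit {limit:.3f}s",
            )
    return ET.tostring(suite, encoding="unicode")


# Hypothetical performance results: (test name, measured seconds, allowed seconds).
results = [("search_product", 0.180, 0.250), ("add_to_cart", 0.410, 0.300)]
report = to_junit_xml("performance", results)
print(report)
```

Writing the returned string to a file in the location the CI server scans for test reports would let performance results appear next to the functional test results.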
