Performance testing for microservices

Monitoring is a key component of all performance testing, and especially so for CPT. It is required not only for understanding performance bottlenecks and guiding follow-up tuning, but also because the high frequency and volume of tests during CPT, and of the resulting data, call for data analytics and alerting capabilities.

For example, data analytics is required to establish performance baselines, report regressions, and generate alerts for other anomalous behavior. For component-based applications, we also need specialized tools for monitoring the containers that host application and test assets.
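As a rough illustration of baseline-based alerting, the sketch below flags a new measurement that deviates from the historical baseline by more than a chosen number of standard deviations. The function name, the z-score approach, the threshold, and the sample latencies are all assumptions made for illustration; a real observability tool would likely use more robust statistics.

```python
from statistics import mean, stdev


def is_anomalous(history_ms, latest_ms, z_threshold=3.0):
    """Return True if latest_ms is more than z_threshold standard
    deviations away from the mean of the historical measurements."""
    if len(history_ms) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history_ms)
    spread = stdev(history_ms)
    if spread == 0:
        return latest_ms != baseline
    return abs(latest_ms - baseline) / spread > z_threshold


# Hypothetical response times (ms) from previous test runs.
history = [120.0, 118.0, 123.0, 121.0, 119.0]
print(is_anomalous(history, 122.0))  # within normal variation
print(is_anomalous(history, 180.0))  # far outside the baseline
```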

In fact, we recommend the use of Continuous Observability solutions to not only analyze performance data, but also provide proactive insights around problem detection and remediation.

This is especially important for debugging issues like tail latencies and other system issues unrelated to application components. CPT requires tests to be run frequently in response to change events, with a variety of accompanying test assets such as test scripts, test configurations, test data, and virtual services, in dedicated test environments.

For component-based applications that are deployed in containers, we may package test assets including test data in sidecar deployment containers and deploy them alongside application containers. CPT starts with well-defined performance requirements.

As we discussed earlier, these can be performance constraints attached to functional requirements such as user stories and features, to transactions, or to customer journeys (typically defined by the Product Owner), or SLOs for service components (typically defined by Site Reliability Engineers).

There are various approaches to defining performance tests for acceptance, integration, system, and e2e test scenarios. For performance requirements attached to functional requirements, we may use Behavior Driven Development (BDD) to define performance acceptance cases in Gherkin format.

For example, the baseline acceptance test for an API can be written as a Gherkin feature file. That feature file can then be translated into YAML that may be executed using tools like JMeter. For system and e2e test scenarios, our recommendation is to define those using model-based testing tools like ARD.

This allows us to conduct automated change impact analysis and precise optimization, and generate performance test scripts and data directly from the model that can be executed with tools like JMeter. As part of every build, we recommend running baseline performance tests on selected components based on the change impact analysis as described earlier.

These are short-duration, limited-scale performance tests (using a small number of virtual users) run at the API level against a single instance of the component. Their purpose is to establish a baseline, assess build-over-build performance regression, and provide fast feedback to the development team.

A significant regression may be used as a criterion to fail the build. Tests on multiple impacted APIs can be run in parallel, each in its own dedicated environment. Tools such as JMeter and BlazeMeter may be used for such tests. The profile of such a test depends on the performance requirements of the component.

For example, the test profile for the Search component that we discussed earlier would be derived from its performance requirements. See the figure below for examples of outputs from such tests, showing baselines and trend charts.
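The build-over-build regression check described above could be sketched as follows. This is a minimal illustration, not the behavior of any particular tool: the function names, the 10% default threshold, and the sample latencies are assumptions chosen for the example.

```python
def regression_pct(baseline_ms, current_ms):
    """Percentage change of the current build's latency relative to the
    baseline (positive means the current build is slower)."""
    return (current_ms - baseline_ms) / baseline_ms * 100.0


def should_fail_build(baseline_ms, current_ms, max_regression_pct=10.0):
    """Fail the build only when the slowdown exceeds the allowed margin."""
    return regression_pct(baseline_ms, current_ms) > max_regression_pct


# Hypothetical p95 latencies (ms) for the previous and the current build.
print(should_fail_build(200.0, 230.0))  # 15% slower than baseline
print(should_fail_build(200.0, 210.0))  # 5% slower than baseline
```

Expressing the threshold as a percentage of the baseline, rather than as an absolute number, lets the same gate be reused across components with very different latency profiles.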

If a component has dependencies on other components, we recommend using virtual services to stand in for the dependent components so that these tests can be spun up and executed in a lightweight manner within limited time and environment resources.
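To make the idea of a virtual service concrete, here is a minimal sketch using only the Python standard library: a stub HTTP endpoint that returns a canned response after a configurable delay, so that it can mimic a dependency's expected response time. The helper names, the 50 ms delay, and the response body are hypothetical; dedicated service virtualization tools offer far more (recorded traffic, stateful behavior, protocol support).

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def make_virtual_service(delay_s, body, port=0):
    """Create a stub server that answers every GET with `body` (as JSON)
    after sleeping for `delay_s` seconds. Port 0 picks a free port."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            time.sleep(delay_s)  # simulate the dependency's response time
            payload = json.dumps(body).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

        def log_message(self, format, *args):
            pass  # keep test output quiet

    return ThreadingHTTPServer(("127.0.0.1", port), Handler)


def start_in_background(server):
    """Serve requests on a daemon thread so the caller is not blocked."""
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    return thread


def timed_get(url):
    """Return (elapsed_seconds, decoded_json_body) for a GET request."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    start = time.perf_counter()
    with opener.open(url, timeout=5) as response:
        body = json.loads(response.read())
    return time.perf_counter() - start, body


if __name__ == "__main__":
    server = make_virtual_service(0.05, {"status": "ok"})
    start_in_background(server)
    elapsed, body = timed_get(f"http://127.0.0.1:{server.server_address[1]}/")
    print(body, f"{elapsed * 1000:.0f} ms")
    server.shutdown()
    server.server_close()
```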

A variety of test assets such as test scripts, test data, virtual services, and test configurations are created in the previous steps and need to be packaged for deployment to the appropriate downstream environments for running different types of tests.

As mentioned above, for component-based applications — where micro-services are typically deployed as containers — we recommend packaging these test assets as accompanying sidecar containers. This is an important aspect of being able to automate the orchestration of tests in the pipeline.

Scaled component tests are conducted on isolated impacted components based on change impact analysis to test for SLO conformance and auto-scaling.

We may run these tests at higher loads, but that takes longer (increasing the Lead Time for Change) and consumes more test environment resources. Run at the highest load possible within the maximum time allotted to the test.
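One simple way to apply this trade-off is to pick the highest load level whose estimated run time still fits the time budget. The sketch below assumes we already have rough duration estimates per load level; the function name and all the numbers are hypothetical.

```python
def pick_load_level(estimated_minutes_by_users, time_budget_minutes):
    """Return the highest virtual-user count whose estimated run time fits
    within the budget, or None if no level fits."""
    feasible = [
        users
        for users, minutes in estimated_minutes_by_users.items()
        if minutes <= time_budget_minutes
    ]
    return max(feasible) if feasible else None


# Hypothetical estimates: virtual users -> expected test duration (minutes).
estimates = {100: 8.0, 500: 18.0, 1000: 27.0, 2000: 55.0}
print(pick_load_level(estimates, 30.0))
```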

Typically, scaled component tests should be limited to no more than 30 minutes in order to minimize delays to the CD pipeline. After the SLO validation tests are completed, the results are reported and the CD pipeline proceeds. However, we recommend running spike and soak tests over a longer duration, often more than a day, without holding up the CD pipeline.

These tests often help catch creeping regressions and other reliability problems that may not be caught by limited-duration tests. Another key item to monitor during these tests is tail latency, which is typically not detected in the baseline tests described above.
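The sketch below illustrates why tail latency needs to be tracked separately: with a small number of very slow requests, the median stays low while the 99th percentile is dominated by the outliers. It uses the nearest-rank percentile definition, which is one of several common conventions; the sample data is invented.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies (ms): 98 fast requests and 2 slow outliers.
latencies = [20.0] * 98 + [900.0, 1200.0]
print("p50:", percentile(latencies, 50))
print("p99:", percentile(latencies, 99))
```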

We need to closely monitor 99th percentile (P99) performance. Scaled component tests should leverage service virtualization to isolate the component under test from its dependent components. Such virtual services must be configured with response times that conform to their SLOs.

See more on this in the section on the use of service virtualization. To minimize test data provisioning time, these tests need to use hybrid test data.

Although distributed load generators can be used to account for network overheads, use of appropriate network virtualization significantly simplifies the provisioning of environments for such tests. Scaled system tests are API-level transaction tests based on change impact analysis across multiple components to test for transaction SLO conformance and auto-scaling.

These tests should be run after functional cross-service contract tests have passed. A transaction involves a sequence of service invocations using the service APIs in a chain (see the figure below, where the transaction invokes Service A, followed by B and C, etc.).

These tests help expose communication latencies and other performance characteristics over and above individual component performance. Distributed load generators, or suitable network virtualization, should be used to account for network overheads. Since such tests take more time and resources, we recommend running them only periodically, and only for critical transactions that have been impacted by some change.

Such tests also need to be run with real components for those that have been impacted by the change, and with virtual services for dependent components that have not. Also, to minimize test data provisioning time, these tests need to use hybrid test data.

The typical process for running such a test is shown in the figure below. Depending on cycle time availability in the CD pipeline, we need to limit the load level. System tests are probably the most challenging performance tests in the context of CPT, since they cross component boundaries.

At this stage of the CD pipeline, we should be confident that the individual components that have been impacted are well tested and scale correctly. However, additional latencies may creep in from other system components, such as the network, message buses, shared databases, and other cloud infrastructure, as well as from the aggregation of tail latencies across multiple components. These typically originate in some system component outside of the application components.

For this reason, we recommend that other system components also be performance tested individually using the CPT methodology described here. It is easier to do so for systems that use infrastructure-as-code, since changes to such systems can be detected and tested more easily. Leveraging Site Reliability Engineering techniques is ideal for addressing such problems.
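The aggregation of tail latencies mentioned above can be made concrete with a little arithmetic. If a transaction calls n components and each call independently has a 1% chance of being slower than that component's P99, the chance that at least one call lands in the tail is 1 - 0.99^n. The sketch below computes this; independence between calls is a simplifying assumption that real systems often violate.

```python
def prob_transaction_hits_tail(num_calls, quantile=0.99):
    """Probability that at least one of num_calls independent service calls
    is slower than that service's latency at the given quantile."""
    return 1.0 - quantile ** num_calls


for calls in (1, 3, 10, 100):
    share = prob_transaction_hits_tail(calls) * 100
    print(f"{calls:>3} calls: {share:.1f}% of transactions hit a tail latency")
```

Under this assumption, the share of affected transactions grows quickly with the length of the call chain, which is why a transaction can miss its SLO even when every individual component meets its own.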

In the pre-prod environments, we recommend running scaled e2e user journey tests for selected journeys based on change impact analysis. These tests measure customer experience as perceived by the user. Since these tests typically take more time and resources to run, we recommend that they be run sparingly and selectively in the context of CPT; for example, when multiple critical transactions have been impacted, or major configuration updates have been made.

Such tests are typically run with real service instances (virtual services may be used to stand in for dependent components if they are not part of the critical path), realistic test data, a realistic mix of user actions (typically derived from production usage), and real network components with distributed load generators.

For example, an e-commerce site has a mix of varied user transactions such as login, search, checkout, etc. As in the system testing described above, it is vital to monitor other system components during these tests, even more closely than application components.
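A realistic mix of user actions, as in the e-commerce example above, is often modelled as a weighted random choice per virtual-user step. The sketch below shows one way to do that; the weights are invented for illustration and would normally be derived from production usage data.

```python
import random


def pick_actions(mix, count, seed=None):
    """Draw `count` user actions at random, proportionally to the weights
    in `mix` (a mapping of action name -> relative weight)."""
    rng = random.Random(seed)  # a seed makes the sequence reproducible
    actions = list(mix)
    weights = [mix[action] for action in actions]
    return rng.choices(actions, weights=weights, k=count)


# Hypothetical traffic mix (relative weights, not measured data).
traffic_mix = {"login": 20, "search": 60, "checkout": 20}
print(pick_actions(traffic_mix, 10, seed=42))
```

Seeding the generator is a deliberate choice here: it keeps the action sequence identical across runs, so that build-over-build comparisons are not skewed by a different mix.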

We will also follow a convention for each of the services that we write tests for. The number and nature of the scenarios will depend on the service being tested; a simple scenario for an imaginary auth service might test only the happy path. One thing to note is the expect attributes set in such a scenario: even though we're getting slightly ahead of ourselves here, it's worth setting them early.

Until the plugin is enabled, those annotations don't have any effect; we'll look at how we can use the plugin in a follow-up article.

When writing tests for a service, you'd typically start with covering the happy path or the endpoints that are more likely to be performance-sensitive, and then increase coverage over time. Adding this configuration will allow us to seamlessly test different versions of our service, e.g. our service deployed in a dev and a staging environment.

Since we also want to be able to run these tests against a local instance of our service (e.g. when writing new code, or when learning how a service works by reading and running the scenarios), we will define a "local" environment as well.

If we need to write a bit of custom JS (maybe using some npm modules) to customize the behavior of virtual users for this service, e.g. to generate some random data in the format the service expects, we can write and export our functions from this module to make them available to this service's scenarios.

Let's say our auth service has SLOs for p99 response time and for error rate. We could encode these requirements in the Artillery configuration.

Artillery supports automatic checking of response time and error rate conditions after a test run is completed. Here we're encoding our SLOs for the service as a maximum p99 response time and a maximum acceptable error rate. Once the test run is over, Artillery will compare the actual metrics with the objectives, and if they're higher, it will exit with a non-zero exit code.
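The logic of such a post-run check can be sketched in a few lines. This is only an illustration of the idea, written in Python; it is not Artillery's implementation, and the function names and threshold values are hypothetical (the source does not preserve the actual SLO numbers).

```python
def check_slos(p99_ms, error_rate_pct, max_p99_ms, max_error_rate_pct):
    """Compare measured metrics against the objectives and return a list of
    human-readable violations (empty when all objectives are met)."""
    violations = []
    if p99_ms > max_p99_ms:
        violations.append(f"p99 {p99_ms} ms exceeds {max_p99_ms} ms")
    if error_rate_pct > max_error_rate_pct:
        violations.append(
            f"error rate {error_rate_pct}% exceeds {max_error_rate_pct}%"
        )
    return violations


def exit_code_for(violations):
    """A non-zero exit code lets a CI/CD job treat the run as failed."""
    return 1 if violations else 0


# Hypothetical measurements and thresholds, for illustration only.
problems = check_slos(p99_ms=640.0, error_rate_pct=0.05,
                      max_p99_ms=500.0, max_error_rate_pct=0.1)
print(problems, exit_code_for(problems))
```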

Note that Artillery can only measure latency, which includes both the application's response time and the time for the request and response to traverse the network. Depending on where Artillery is run from, those values may differ very slightly (e.g. when Artillery is run from an ECS cluster in the same VPC as the service under test) or quite substantially (e.g. when an Artillery test is run from us-east-1 on a service deployed in eu-central). Use your judgement when setting those ensure values.

It is possible to make Artillery measure just the application's response time, but that requires that your services make that information available with a custom HTTP header such as the X-Response-Time header, and that some custom JS code is written.
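To show what separating the two components involves, the sketch below takes a measured end-to-end latency and the value of an X-Response-Time header, and derives the time spent outside the application. It is written in Python purely for illustration (in Artillery itself this would be custom JS), and it assumes the header carries a number of milliseconds with an optional "ms" suffix, which depends on how the service emits it.

```python
def parse_response_time_ms(header_value):
    """Parse an X-Response-Time style header value such as '12.5ms'.
    Assumes milliseconds, with an optional 'ms' suffix."""
    value = header_value.strip().lower()
    if value.endswith("ms"):
        value = value[:-2]
    return float(value)


def network_overhead_ms(total_latency_ms, header_value):
    """Time spent outside the application: total latency minus the
    application's self-reported response time (never negative)."""
    return max(total_latency_ms - parse_response_time_ms(header_value), 0.0)


# Hypothetical measurement: 80 ms end to end, 12.5 ms inside the service.
print(network_overhead_ms(80.0, "12.5ms"))
```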

Now that you've set up a project structure that's extensible and re-usable, go ahead and write a scenario or two for your service. You would then run your tests with the Artillery command-line tool.

End-to-end Performance Testing of Microservice-based Systems, by Hassy Veldstra (Thursday, January 7).

End-to-end tests cover the entire application and its interactions with external systems.

This is the slowest and most expensive type of test, but it ensures the application will be working in production for users. In addition, functional, performance and security tests are external to the pyramid, and they are run for individual services and the application as a whole.

A variety of tools is available for microservices testing, and other tools are often used to complement them. Automating test runs at a constant frequency will minimize errors and let you focus on creating tests, monitoring results, and optimizing services.

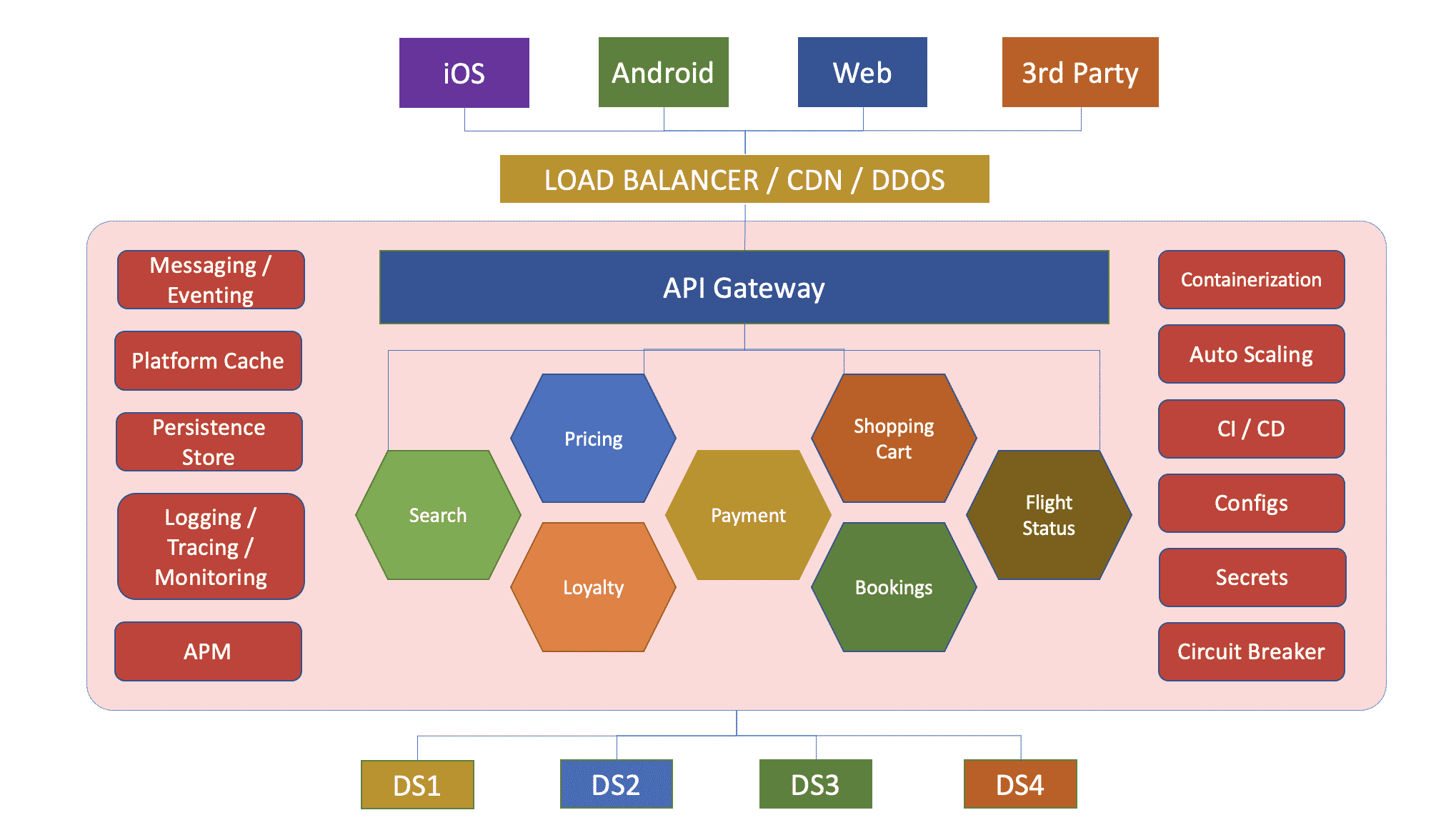

Performance testing in a microservices architecture evaluates the system's responsiveness, stability, and scalability under various load conditions. It identifies bottlenecks and performance issues that could affect user experience and system reliability, so that they can be resolved before they reach users.

Define performance metrics. Identify the key performance indicators (KPIs) you are tracking, like response time, throughput, and resource utilization. Select tools that can effectively simulate load and measure performance. Consider using JMeter or BlazeMeter.

Outline various test scenarios that simulate different types of load conditions. Generate test data and scripts to simulate the defined scenarios.

Observe system metrics in real-time during test execution. Use monitoring tools within the testing tool or APMs to assess whether the system meets the defined performance criteria. Optimize code or scale resources as needed, and then re-run the tests to validate improvements.

Microservices is a distinctive software development approach that focuses on creating modules that work collectively to execute similar tasks. Instead of following a traditional monolithic architecture (a single application with multiple functions), developers use the microservice approach to create independent modules for each function. Since each software component is independent of the others, adding new functionalities or scaling services is a lot easier compared to the monolithic architecture. Nevertheless, the microservice architecture can also make an application more complex, especially if we add multiple functionalities. Likewise, testing the collective functionality of multiple services is a lot more difficult due to the distributed nature of the application. Since microservices follow a different architecture, we also need a unique strategy for load testing microservices. In this article, we will explore different approaches, tools, and tips for load testing microservice applications.
